Accelerate computer vision training using GPU preprocessing with NVIDIA DALI on Amazon SageMaker | AWS Machine Learning Blog

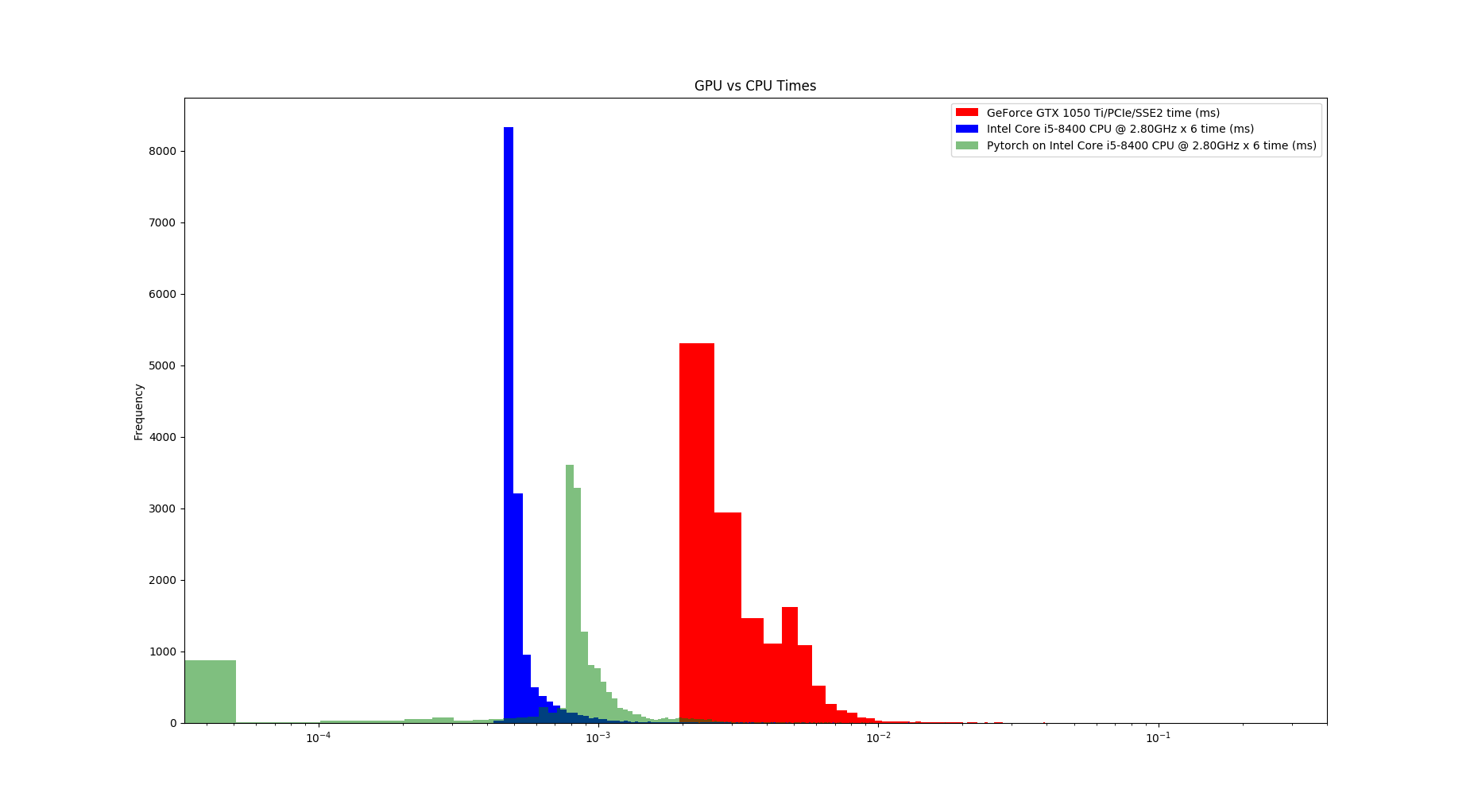

Use GPU in your PyTorch code. Recently I installed my gaming notebook… | by Marvin Wang, Min | AI³ | Theory, Practice, Business | Medium

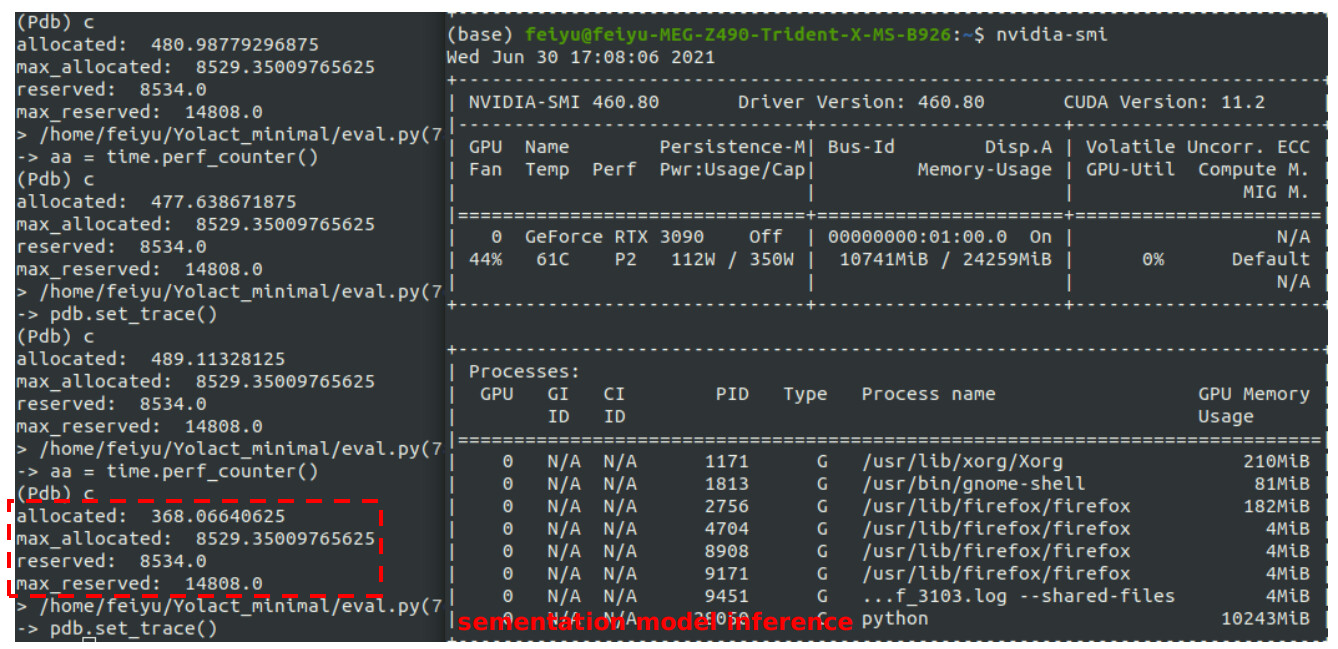

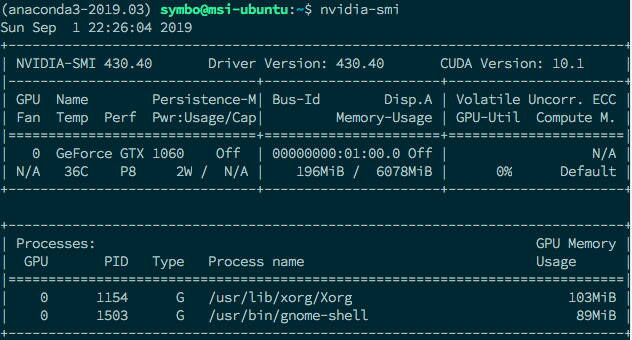

PyTorch GPU inference with Docker and Flask :: Päpper's Machine Learning Blog

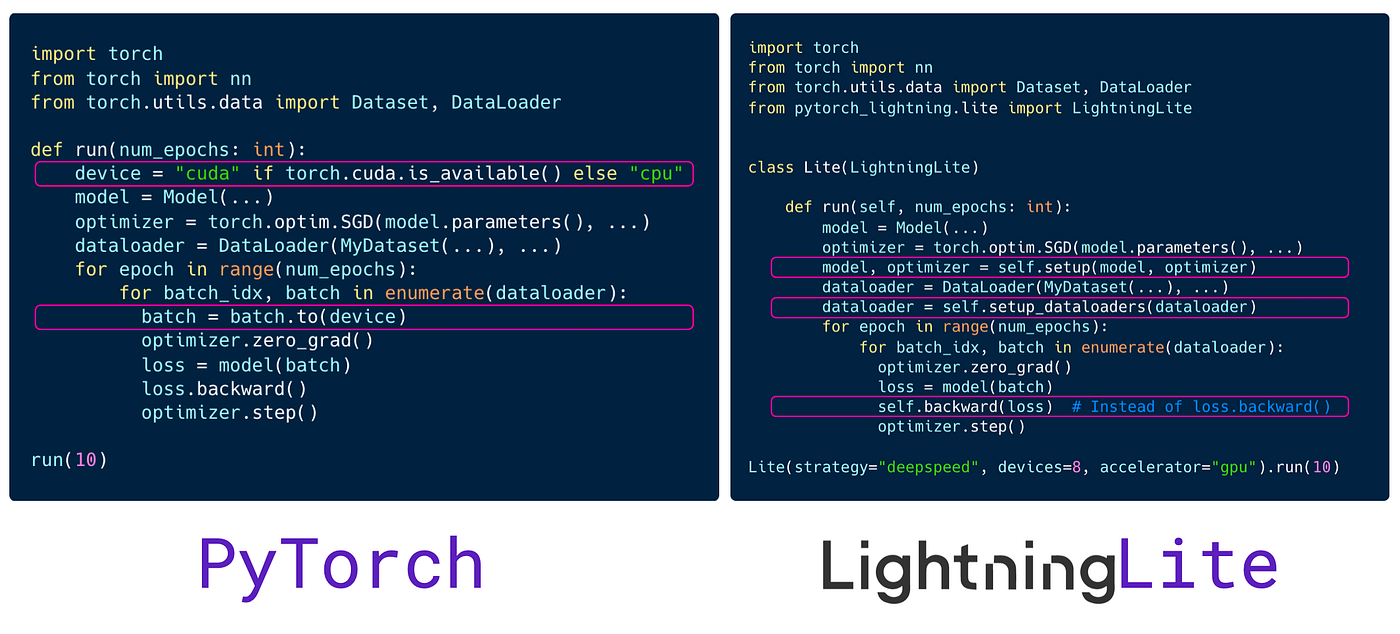

Scale your PyTorch code with LightningLite | by PyTorch Lightning team | PyTorch Lightning Developer Blog